Web Scraper

- Browser-based tool

- Easy-to-use interface

- Exports data to CSV/JSON

- Great for beginners

ParseHub

- Visual data extraction

- Handles complex websites

- Cloud-based platform

- Supports multiple formats

Scrapy

- Python-based framework

- Highly customizable

- Open-source and free

- Great for large-scale scraping

Apify is a popular platform for web scraping and automation, but it’s not a one-size-fits-all solution.

Depending on your goals—whether it’s data extraction, social media scraping, or large-scale automation—there might be other tools that better fit your specific needs, budget, or technical expertise.

Luckily, there are plenty of excellent alternatives that offer unique features, from no-code options for beginners to advanced frameworks for developers.

Let’s explore the top Apify alternatives, breaking down their features, pros, and cons so you can pick the one that best fits your project.

10 Best Apify Alternatives 2025: The Top Options To Explore

Here are the top 10 Apify alternatives:

1. Mixnode

Mixnode is a dynamic platform designed to extract and analyze data from the internet with impressive speed, flexibility, and massive scalability.

It offers a wide range of capabilities that enable efficient data extraction and comprehensive analysis from diverse online sources.

At its core, Mixnode excels in its ability to retrieve data from the web swiftly. With its high-performance infrastructure, this platform ensures rapid extraction of desired information, minimizing the time and effort required for data acquisition.

Whether you need specific data points or comprehensive datasets, Mixnode’s agility ensures a seamless and efficient data retrieval process.

Flexibility is another key strength of Mixnode. Its toolkit lets users tailor data extraction to their specific requirements.

From structured data to unstructured content, Mixnode provides the necessary tools to extract and transform information from various web sources, enabling users to obtain valuable insights and make data-driven decisions.

2. Web Scraper

Web Scraper is a well-regarded browser extension for web scraping and one of the most popular tools of its kind.

With Web Scraper, you can initiate scraping activities within minutes, thanks to its user-friendly interface and seamless setup process.

Additionally, the Cloud Scraper feature lets you automate scraping tasks in the cloud, with no software to download and no coding expertise required.

As the go-to choice for web scraping, Web Scraper offers a host of benefits for users seeking to extract valuable data from websites.

Its popularity stems from its simplicity and accessibility. Whether you’re a beginner or an experienced user, Web Scraper’s intuitive interface allows you to quickly get started with your scraping endeavors.

You don’t have to spend valuable time and effort navigating complex software or deciphering intricate coding languages.

Web Scraper’s key feature, Cloud Scraper, takes automation a step further and helps you streamline your scraping tasks.

Because scraping jobs run in the cloud, there is nothing to install on your device, and because jobs are configured visually, you can automate tasks without writing any code.
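Web Scraper exports its results as CSV or JSON, so a common next step is loading an export into your own scripts. The snippet below is a minimal sketch using only Python’s standard library. The column names (`title`, `price`, `url`) and the sample rows are assumptions made up for illustration, not the tool’s actual export schema.

```python
import csv
import io
import json

# Hypothetical export: column names and rows are invented for illustration.
EXPORTED_CSV = """title,price,url
Blue Widget,19.99,https://example.com/widgets/blue
Red Widget,24.50,https://example.com/widgets/red
"""


def csv_to_records(csv_text):
    """Parse CSV text into a list of dicts, converting price to a float."""
    reader = csv.DictReader(io.StringIO(csv_text))
    records = []
    for row in reader:
        row["price"] = float(row["price"])
        records.append(row)
    return records


records = csv_to_records(EXPORTED_CSV)

# Re-serialize the cleaned rows as JSON for downstream tools.
print(json.dumps(records, indent=2))
```

With a real export you would read the text from the downloaded file instead of the inline string.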

3. Import.io

Import.io is a cutting-edge web-based platform that empowers users with the ability to harness the power of machine-readable web data.

With Import.io’s comprehensive suite of tools, you can effortlessly create APIs or crawl entire websites with unparalleled speed and efficiency, all without the need for coding.

At its core, Import.io revolutionizes the way users interact with web data. Traditional methods of data extraction and analysis often involve time-consuming manual processes and intricate coding.

However, Import.io streamlines this entire workflow, enabling users to extract and utilize valuable data from the web in a fraction of the time.

One of the standout features of Import.io is its capability to create APIs without coding. APIs, or Application Programming Interfaces, are instrumental in enabling seamless data integration and automation.

With Import.io, you can effortlessly transform web data into APIs, allowing for smooth and efficient data exchange between different systems and applications.

This opens up a world of possibilities for developers, data scientists, and businesses seeking to leverage web data for their specific needs.

4. UiPath

UiPath is a powerful automation tool that offers a comprehensive set of features to automate web and desktop applications.

Its free Community Edition is fully featured and highly extensible, making it an ideal choice for individuals, small professional teams, educational institutions, and training purposes.

At its core, UiPath empowers users to automate various tasks and processes, enhancing productivity and efficiency.

Whether it’s automating repetitive actions in a web application or streamlining workflows in a desktop environment, UiPath’s versatile capabilities cater to a wide range of automation needs.

One of the key advantages of UiPath is its user-friendly interface, which simplifies the automation process. The platform provides a visual workflow designer, allowing users to create automation workflows without the need for extensive coding knowledge.

Through a drag-and-drop approach, users can easily assemble automation sequences by combining activities, such as data extraction, input automation, and decision-making logic.

5. ParseHub

ParseHub is a powerful web scraping tool designed specifically to navigate and extract data from the modern web landscape. One of the standout features of ParseHub is its ability to extract data from virtually anywhere on the web.

Whether you’re dealing with single-page applications, multi-page applications, or any other modern web technology, ParseHub is equipped to handle the challenge.

It effortlessly navigates through dynamic web pages, AJAX-driven sites, and other advanced web technologies, ensuring that no data remains out of reach.

These techniques are commonly used in modern web development to enhance user experience and load content dynamically, but they can pose challenges for traditional scraping tools.

ParseHub is built to handle these complexities, so data extraction stays accurate and comprehensive.

ParseHub provides a user-friendly interface and a visual point-and-click system for creating scraping projects. You can easily define the data you need by selecting the elements on the webpage, such as text, images, links, or tables.

This intuitive approach eliminates the need for complex coding or manual navigation through HTML structures. With ParseHub, you can quickly build and modify scraping projects, saving valuable time and effort.
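To show what a point-and-click selection of text, images, and links amounts to under the hood, here is a rough sketch using Python’s built-in `html.parser`. It is not ParseHub’s implementation or API. The `ElementCollector` class and the sample HTML are hypothetical and only illustrate pulling a few element types out of a page.

```python
from html.parser import HTMLParser


class ElementCollector(HTMLParser):
    """Collect link targets, image sources, and <h2> heading text."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.images = []
        self.headings = []
        self._in_heading = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "href" in attrs:
            self.links.append(attrs["href"])
        elif tag == "img" and "src" in attrs:
            self.images.append(attrs["src"])
        elif tag == "h2":
            self._in_heading = True

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_heading = False

    def handle_data(self, data):
        # Keep only text that appears inside an <h2> element.
        if self._in_heading and data.strip():
            self.headings.append(data.strip())


# Hypothetical page fragment, invented for illustration.
SAMPLE_HTML = """
<h2>Blue Widget</h2>
<img src="/img/blue.png" alt="Blue Widget">
<a href="/widgets/blue">Details</a>
<h2>Red Widget</h2>
<a href="/widgets/red">Details</a>
"""

collector = ElementCollector()
collector.feed(SAMPLE_HTML)
print(collector.headings)
print(collector.links)
print(collector.images)
```

A visual tool saves you from writing and maintaining this kind of code, which matters most when pages change layout often.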

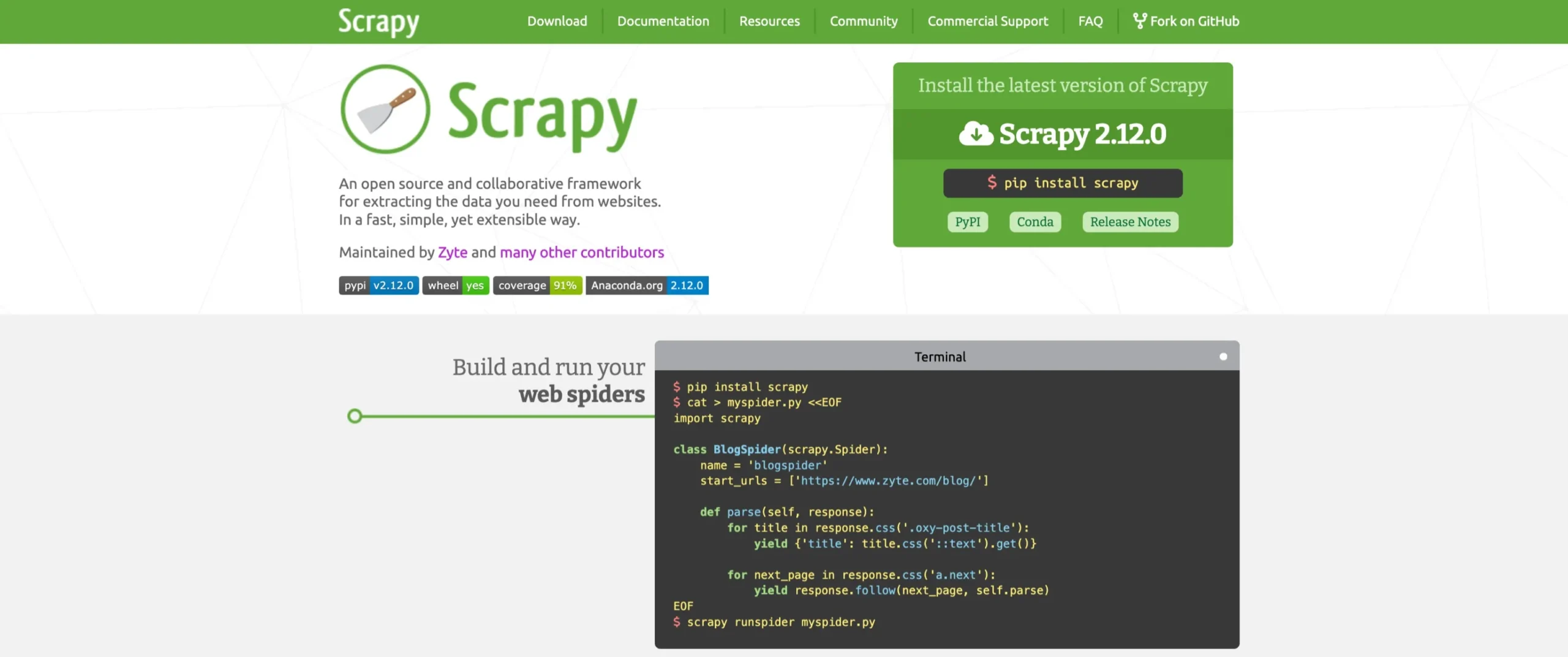

6. Scrapy

Scrapy is a Python-based web-crawling framework renowned for its versatility and open-source nature.

Originally designed for web scraping, Scrapy has evolved to become a powerful tool for extracting data using APIs and functioning as a general-purpose web crawler.

Developed and maintained by Zyte, formerly known as Scrapinghub, Scrapy is widely trusted in the field of web scraping.

At its core, Scrapy provides a comprehensive set of tools and libraries for web crawling and data extraction. It offers a flexible and modular architecture that allows developers to tailor their scraping projects to specific requirements.

With its Python foundation, Scrapy leverages the rich ecosystem and extensive libraries available in the Python programming language, making it a popular choice among developers.
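The crawl-and-extract cycle that a framework like Scrapy manages for you can be sketched in plain Python. The example below does not use Scrapy’s API. It is a simplified illustration in which the in-memory `PAGES` site, `fetch`, and `crawl` are all hypothetical names, and a real crawler would add HTTP requests, retries, politeness delays, and item pipelines.

```python
from collections import deque

# Hypothetical in-memory "site": URL -> (extracted item, outgoing links).
PAGES = {
    "/": ({"title": "Home"}, ["/a", "/b"]),
    "/a": ({"title": "Page A"}, ["/b", "/c"]),
    "/b": ({"title": "Page B"}, ["/"]),
    "/c": ({"title": "Page C"}, []),
}


def fetch(url):
    """Stand-in for an HTTP request; returns (item, links) or None."""
    return PAGES.get(url)


def crawl(start_url, max_pages=100):
    """Breadth-first crawl that visits each URL once and collects items."""
    queue = deque([start_url])
    seen = {start_url}
    items = []
    while queue and len(items) < max_pages:
        url = queue.popleft()
        page = fetch(url)
        if page is None:
            continue
        item, links = page
        items.append({"url": url, **item})
        for link in links:
            # Deduplicate so pages that link to each other are not revisited.
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return items


items = crawl("/")
print([item["url"] for item in items])
```

The queue, the set of seen URLs, and the per-page extraction step are the pieces a crawling framework typically lets you configure or replace.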

Scrapy’s versatility extends beyond web scraping. The framework seamlessly integrates with APIs, enabling users to extract data from a wide range of sources.

By leveraging APIs, Scrapy can retrieve structured data from social media platforms, web services, and various online databases.

This flexibility makes Scrapy an ideal choice for applications beyond traditional web scraping.

7. UI.Vision RPA

UI.Vision RPA is an open-source task and test automation tool that goes beyond traditional capabilities. As a browser extension, it not only enables web automation but also offers desktop automation functionalities.

One of the key advantages of UI.Vision RPA is its versatility. Traditional automation tools often focus solely on web automation, leaving other aspects of task automation untouched.

However, UI.Vision RPA breaks this barrier by providing the capability to automate tasks both on the web and the desktop.

Whether it’s interacting with web applications, automating form submissions, extracting data from screens, or performing complex RPA workflows, UI.Vision RPA is equipped to handle diverse automation requirements.

Because UI.Vision RPA runs as a browser extension, it is convenient and easy to use. It integrates with popular web browsers, so users can work with it directly within their browsing environment.

This eliminates the need for separate software installations and provides a user-friendly interface for creating and managing automation tasks.

8. Octoparse

Octoparse is a versatile web scraping tool that caters to intermediate users and offers a drag-and-drop interface.

It supports both local and cloud-based scraping, making it flexible for different use cases. Octoparse can handle CAPTCHA and anti-scraping mechanisms, which is essential for modern websites.

While it’s user-friendly, setting up workflows for advanced tasks can be challenging. The pricing structure can also be expensive for small-scale users.

9. PhantomBuster

PhantomBuster is a powerful tool designed to automate tasks like social media data extraction, lead generation, and workflow automation.

It offers pre-built templates for platforms like LinkedIn, Twitter, and Instagram, making it user-friendly for non-coders.

Integration with tools like Zapier allows users to connect PhantomBuster to larger workflows. However, it is more focused on social data and less effective for general web scraping.

Additionally, the subscription pricing can get expensive for scaling projects.

10. WebHarvy

WebHarvy is a point-and-click desktop-based web scraper that requires no coding skills.

It is ideal for users who want to scrape data quickly from static or dynamic websites without complex configurations.

WebHarvy supports advanced features like regex patterns, data formatting, and scheduling, making it versatile for small and medium-sized projects.

However, it is limited to desktop use and doesn’t offer cloud-based scraping, which can be a drawback for large-scale or collaborative projects.
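Regex patterns, one of the features mentioned above, are useful for pulling a clean value out of messy scraped text. The following is a small sketch with Python’s `re` module, not WebHarvy’s own configuration syntax. The sample strings and the dollar-amount pattern are assumptions chosen for illustration.

```python
import re

# Hypothetical scraped strings; the formats are assumptions for illustration.
raw_prices = [
    "Price: $1,299.00 (incl. tax)",
    "Now only $49.95!",
    "Call for price",
]

# A dollar sign followed by digits (with optional thousands commas) and cents.
PRICE_PATTERN = re.compile(r"\$([\d,]+\.\d{2})")


def extract_price(text):
    """Return the first dollar amount in text as a float, or None."""
    match = PRICE_PATTERN.search(text)
    if match is None:
        return None
    return float(match.group(1).replace(",", ""))


prices = [extract_price(text) for text in raw_prices]
print(prices)
```

Strings with no match return `None`, which makes it easy to spot rows that need manual review.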

Conclusion: Apify Alternatives 2025

Choosing the best Apify alternative depends on your needs, budget, and technical skills. If you’re a developer seeking full customization, Scrapy is an excellent open-source framework.

For beginners or non-coders, ParseHub and Octoparse offer user-friendly interfaces with no programming required.

If your focus is social media automation and lead generation, PhantomBuster is a great option. For quick and easy desktop-based scraping, WebHarvy is ideal.

Each tool has its strengths and limitations, so evaluate your project size, complexity, and budget to find the perfect match. There’s an option out there for everyone, from casual users to advanced developers.